¶ Project Abstract

Many people are unable to interact with a computer because of a physical or neurological disability. Most of these individuals, however, retain control of their eye movements. With appropriate technology, eye movements can provide an effective and intuitive way to interact with a computer.

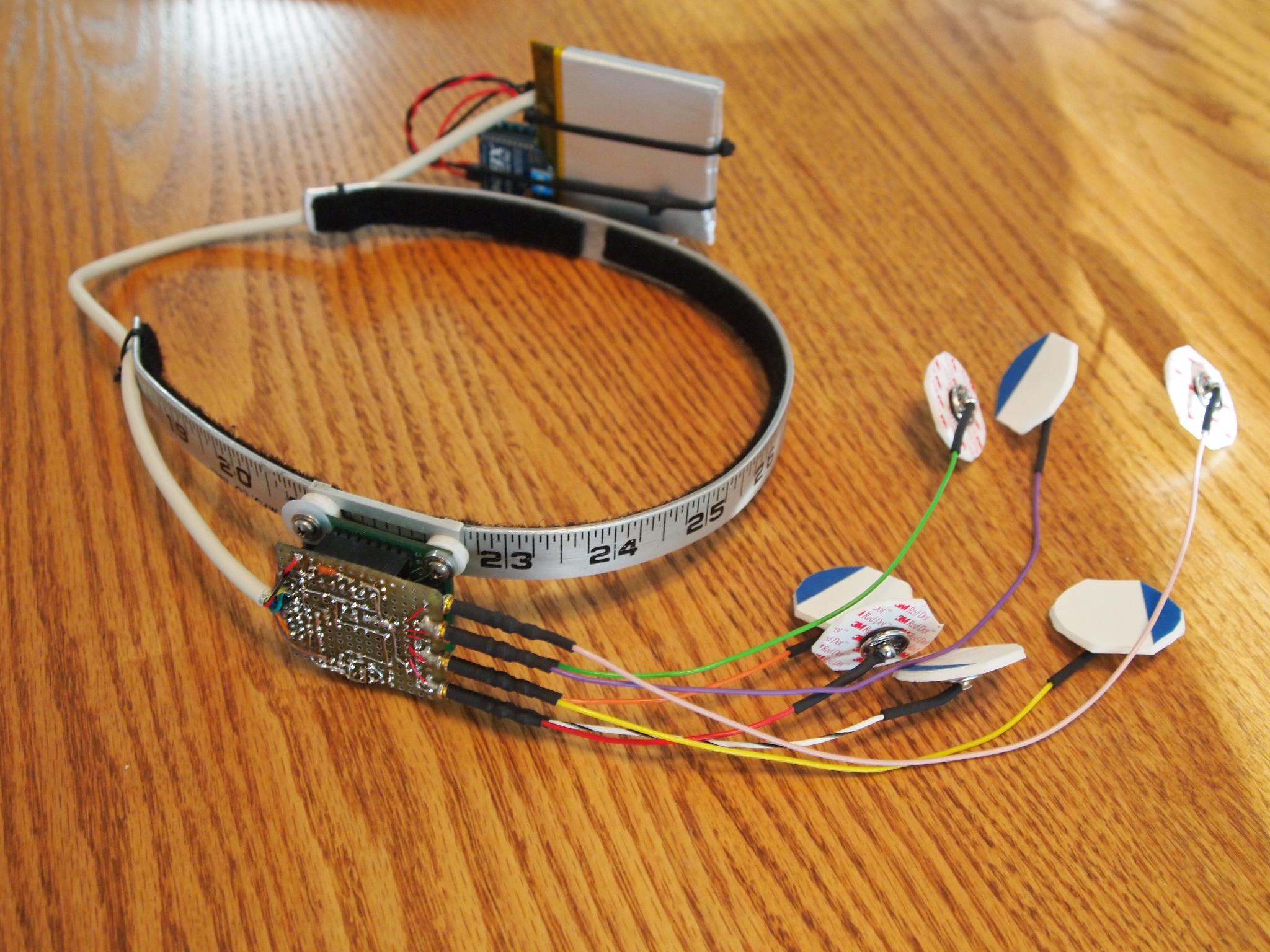

The project goal was to design and build a wireless human-computer interface, based on electrooculography (EOG), that can be used to control a computer or assistive device. We pursued a wireless, wearable design that was lightweight, comfortable, user-friendly, inexpensive, and practical for many different users and applications. TI’s ADS1298 ADC measured the biopotential signals produced by eye position. An Atmel ATmega328P microcontroller, mounted on a PCB attached to a visor, relayed the ADS1298 data wirelessly to a computer. Computer software (MATLAB, C#) interpreted these signals to determine eye position. Combined with head-tracking position and orientation data, this gave the direction of a person’s gaze, which could then drive applications such as moving a cursor.
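The step from eye and head measurements to a cursor position can be illustrated with a minimal Python sketch. It is not the project's MATLAB/C# code. It assumes a linear relation between EOG voltage and eye rotation (a common approximation over moderate angles), a per-user calibration gain, and a flat screen centered in front of the user. All function names, parameters, and numeric values are hypothetical.

```python
import math


def eog_to_angle(voltage_uv, gain_deg_per_uv, offset_uv=0.0):
    """Estimate eye rotation (degrees) from an EOG voltage (microvolts).

    Assumption: a linear model whose gain and offset come from a
    per-user calibration; the real signal also drifts over time.
    """
    return (voltage_uv - offset_uv) * gain_deg_per_uv


def gaze_angle(head_deg, eye_deg):
    """Gaze direction = head orientation + eye-in-head rotation."""
    return head_deg + eye_deg


def gaze_to_cursor(yaw_deg, pitch_deg, distance_mm, px_per_mm,
                   screen_w, screen_h):
    """Project a gaze direction onto a flat screen and return pixel (x, y).

    Assumption: zero yaw/pitch points at the screen center, and the
    screen is perpendicular to that direction at distance_mm.
    """
    x_mm = distance_mm * math.tan(math.radians(yaw_deg))
    y_mm = distance_mm * math.tan(math.radians(pitch_deg))
    x = screen_w / 2 + x_mm * px_per_mm
    y = screen_h / 2 - y_mm * px_per_mm  # screen y grows downward
    # Clamp so the cursor stays on the screen.
    x = min(max(x, 0), screen_w - 1)
    y = min(max(y, 0), screen_h - 1)
    return int(round(x)), int(round(y))


if __name__ == "__main__":
    # Hypothetical calibration: 0.05 degrees of rotation per microvolt.
    eye_yaw = eog_to_angle(200.0, gain_deg_per_uv=0.05)
    eye_pitch = eog_to_angle(-100.0, gain_deg_per_uv=0.05)
    yaw = gaze_angle(head_deg=5.0, eye_deg=eye_yaw)
    pitch = gaze_angle(head_deg=0.0, eye_deg=eye_pitch)
    print(gaze_to_cursor(yaw, pitch, distance_mm=600.0, px_per_mm=4.0,
                         screen_w=1920, screen_h=1080))
```

In practice the gain and offset would need to be re-estimated regularly, since EOG baselines drift, and the head pose would come from a separate tracker rather than a fixed value.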

With continued development, our EOG-based human-computer interface could be useful for individuals who are otherwise unable to control a computer, or as an interface to an assistive device.

¶ Awards

¶ 2012 Rochester Regional Science Fair Awards

- First Place Paper

- First ISEF Award

¶ 2012 State Junior Science and Humanities Symposium (JSHS)

- First Place Finalist

¶ 2012 Intel International Science and Engineering Fair (ISEF)

- Second Grand Award

¶ 2012 National JSHS

- First Place in Engineering